Fixing Plunger Lifts with AI and Better Data

Importance of High-Quality Data for Plunger Lift Optimization

High-quality data is the foundation of effective AI optimization in plunger lift systems. In oil and gas wells, “garbage in” leads to “garbage out”: AI models can only be as insightful as the data they learn from. Reliable, granular data enables informed decisions and helps identify inefficiencies in lift operations. For example, ConocoPhillips developed a Plunger Lift Optimization Tool (PLOT) that increased gas production by up to 30% in over 4,500 wells by leveraging more frequent and detailed well data. Poor data quality, on the other hand, can mislead AI algorithms, resulting in suboptimal control of lift cycles, missed production opportunities, or even equipment damage from incorrect adjustments.

Quality data directly impacts performance and efficiency. With accurate real-time readings, AI can fine-tune plunger lift cycle times (shut-in vs flow periods) to maximize output without human guesswork. One OPX Ai case saw a 20% production increase and 30% less downtime after deploying AI-driven optimization, thanks largely to continuous monitoring and data-driven adjustments. Good data also enhances safety and compliance – automated systems can reduce venting and manual interventions if they have trustworthy sensor inputs. In contrast, many plunger lift installations today run below potential due to “suboptimal configurations…and a lack of advanced monitoring”, meaning data gaps leave operators “flying blind”.

Another crucial benefit of high-quality data is predictive maintenance. AI algorithms can detect subtle trends in vibration, pressure, or cycle timing that precede equipment failures. Catching these early prevents costly shutdowns. OPX Ai emphasizes that reliable data underpins predictive analytics: analyzing quality data allows forecasting of equipment wear and maintenance needs, minimizing unplanned downtime. Conversely, if key failure indicators aren’t measured or are lost in noise, the AI can’t warn operators in time. As a Schlumberger expert notes, many early efforts to predict failures (such as breakdowns of electric submersible pumps, or ESPs) “jumped into ML/AI when the data contained only a small fraction of the answer.” If the main root causes are mechanical but you only measure basic pressures, the model is unlikely to see the issue. In short, complete and accurate data is essential for AI to optimize lift performance, improve efficiency, and anticipate maintenance needs.

Ensuring Good Data Quality: Key Principles

Achieving high-quality data for AI in artificial lift systems requires attention to data frequency, compression/management, and sensor placement. These principles ensure the AI is fed data that is relevant, rich in detail, and reliable.

Data Frequency: Optimal Sampling Rates

Choosing the right data sampling frequency is critical. The sampling rate should be high enough to capture the dynamics of plunger lift cycles and any transient events, but not so high that it overwhelms storage or communication systems without added benefit. High-resolution data provides a more detailed “movie” of well behavior rather than just sparse “snapshots”. For example, ConocoPhillips found that increasing sensor readings from once per hour to every 30–60 seconds was key to optimizing plunger lifts – this higher frequency data allowed engineers to spot which wells were flowing efficiently and which were loading up with liquids. With more frequent pressure and temperature data from different parts of the well, patterns like early liquid accumulation or suboptimal shut-in duration became evident in real time, enabling timely adjustments.

Trade-off considerations: Very high-frequency data (e.g. sub-second) can capture fine details but generates large volumes. In plunger lift monitoring, a typical SCADA polling interval is on the order of 1 minute, which is considered “high-frequency” in field operations. This usually suffices to track plunger arrivals, casing/tubing pressures, and flow rates through each cycle. In specialized diagnostic cases, even higher rates are used: for instance, research shows that data acquisition at 30 Hz (30 readings per second) can record the acoustic pulses of a plunger traveling in the tubing. Such ultra-high resolution is valuable for analyzing plunger motion or detecting anomalies (e.g. plunger strikes at tubing collars), but it’s rarely needed continuously for every well.

The optimal sampling rate balances insight against cost. As a practical starting point, match the frequency to the speed of the process. Plunger lift cycles often last on the order of minutes, so logging key pressures every 30–60 seconds typically captures the important fluctuations throughout a cycle (build-up, arrival, afterflow). Important events like plunger arrival can also be recorded as timestamped events (e.g. a sensor trip) in addition to periodic samples. When in doubt, err on the side of higher frequency: you can always aggregate or filter high-resolution data, but you cannot recover events that were never recorded. Many operators are moving toward event-driven and higher-frequency data capture because it reveals issues that low-frequency logging would miss. As one field engineer put it, trying to optimize with one reading per hour is like seeing snapshots, whereas per-minute data lets you watch the full story unfold in motion.
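To make the snapshot-versus-movie contrast concrete, the sketch below samples one synthetic plunger cycle at two intervals and compares the peak casing pressure each series sees. The pressure profile, the 45-minute cycle, and every name in the code are illustrative assumptions rather than field data.

```python
# Illustrative only: a made-up 45-minute cycle, not measurements from a real well.
BUILDUP_S = 30 * 60   # assumed shut-in (build-up) duration, seconds
CYCLE_S = 45 * 60     # assumed total cycle length, seconds


def casing_pressure(t_s):
    """Synthetic casing pressure in psi at time t_s (hypothetical profile)."""
    t = t_s % CYCLE_S
    if t < BUILDUP_S:
        # Linear build-up from 200 psi toward 350 psi during shut-in.
        return 200.0 + 150.0 * t / BUILDUP_S
    # Linear decline from 350 psi back toward 200 psi while the well flows.
    return 350.0 - 150.0 * (t - BUILDUP_S) / (CYCLE_S - BUILDUP_S)


def sample(interval_s, duration_s):
    """Return (time_s, pressure_psi) pairs taken every interval_s seconds."""
    return [(t, casing_pressure(t)) for t in range(0, duration_s, interval_s)]


def observed_peak(samples):
    """Highest pressure present in a sampled series."""
    return max(p for _, p in samples)


per_minute = sample(60, CYCLE_S)    # one reading per minute
hourly = sample(3600, CYCLE_S)      # one reading per hour

print(len(per_minute), observed_peak(per_minute))  # 45 350.0
print(len(hourly), observed_peak(hourly))          # 1 200.0
```

Under these assumptions the hourly series holds a single point and never sees the 350 psi peak, while the per-minute series traces the whole build-up and decline.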

Data Compression and Management

High-resolution and multi-sensor data streams from artificial lift operations can quickly become massive. Effective data compression and management practices are needed to store and transmit large datasets without losing the insights hidden in the details. The goal is to reduce data volume while preserving critical information (trends, anomalies, peaks).

Modern systems address this by using both smart compression algorithms and data architecture strategies:

Time-series databases & built-in compression: Many SCADA historians (OSIsoft PI, etc.) and IoT platforms use compression techniques that log a new data point only when the value changes beyond a certain threshold. This “exception-based” logging ensures that small oscillations or noise are compressed out, while real shifts are retained. For example, a pressure might be recorded every second during a rapid buildup, but if it plateaus, the system can log just the flat line segment with start/end values, greatly compressing the data. Such methods store the shape of data without needing every raw sample, saving space while keeping important events.

Downsampling and aggregation: A practical approach is to store two tiers of data frequency – keep the full high-frequency data for a rolling window (say the last few weeks for detailed analysis), but archive older data at a lower resolution (e.g. 1 data point per 10 minutes for long-term history). This ensures long trends and seasonal patterns are available without the full granularity of every second, which might no longer be necessary months later. Aggregating data into hourly or daily statistics (min, max, average, etc.) also provides valuable summaries for AI models to catch long-term drifts, while cutting storage needs.

Edge processing and event tagging: Instead of streaming every raw reading to the cloud, edge devices (wellsite RTUs or controllers) can run simple algorithms to detect events or anomalies. For instance, the controller can locally detect a plunger arrival or a pressure surge, then send an event log and a short high-frequency snippet around that event, rather than continuously sending high-speed data. This event-based data capture focuses on meaningful changes. In fact, event-driven recording has enabled artificial lift surveillance to evolve from just knowing roughly how often issues occur to measuring precise durations of downtime per event type and even automatic event detection. In other words, by compressing “time between events” and richly recording the event moments, one doesn’t lose insight – instead, one gains a clearer picture of each significant incident without bogging down the database with idle data.
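The exception-based logging described in the first item above can be sketched as a plain deadband filter. This is a minimal illustration, not the algorithm of any particular historian (commercial products use more elaborate schemes); the function name and the 1 psi deadband are assumptions chosen for the example.

```python
def deadband_compress(samples, deadband):
    """Keep a sample only when it differs from the last kept value by more than deadband.

    samples: list of (timestamp, value) pairs in time order.
    The first and last samples are always kept so the series endpoints survive.
    """
    if not samples:
        return []
    kept = [samples[0]]
    for point in samples[1:]:
        if abs(point[1] - kept[-1][1]) > deadband:
            kept.append(point)
    if kept[-1] != samples[-1]:
        kept.append(samples[-1])
    return kept


# Hypothetical pressure trace: a plateau, a rapid rise, then another plateau.
raw = [
    (0, 100.0), (1, 100.2), (2, 100.1),
    (3, 105.0), (4, 110.0),
    (5, 110.3), (6, 110.1), (7, 110.2),
]
compressed = deadband_compress(raw, deadband=1.0)
print(compressed)
# [(0, 100.0), (3, 105.0), (4, 110.0), (7, 110.2)]
```

Half of the points are dropped, yet the rise from 100 to 110 psi is still fully described. A deadband set too wide would start discarding real transients, which is why the setting deserves periodic review.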

Managing the deluge of data also involves robust infrastructure. In ConocoPhillips’ digital oilfield initiative, connecting hundreds of wells led to “orders of magnitude more data” being collected, so they invested in a private radio/Wi-Fi network to transmit this data to central servers where it is “cleaned up, analyzed and stored”. They even piloted big-data technologies (like Hadoop clusters and in-memory databases) to cope with the scale. The best practice here is to plan for scalability: as you add sensors or increase frequency, ensure your network and storage can handle the throughput. Use compression and intelligent filtering to reduce payload (for example, transmitting 1-minute averages in real-time, but storing 1-second data locally and only sending it on demand or when anomalies are detected).
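A minimal sketch of that last idea follows: the edge device keeps 1-second data locally, transmits 1-minute averages, and sends a short raw snippet only around a detected surge. The function names, the 20 psi jump threshold, and the 10-second window are assumptions for illustration, not settings from any specific controller.

```python
from statistics import mean


def minute_averages(samples):
    """Average 1-second (time_s, value) samples into per-minute buckets."""
    buckets = {}
    for t, v in samples:
        buckets.setdefault(t // 60, []).append(v)
    return [(minute * 60, mean(vals)) for minute, vals in sorted(buckets.items())]


def first_surge(samples, jump_threshold):
    """Time of the first sample-to-sample rise larger than jump_threshold, or None."""
    for (_, v0), (t1, v1) in zip(samples, samples[1:]):
        if v1 - v0 > jump_threshold:
            return t1
    return None


def snippet_around(samples, event_time_s, half_window_s=10):
    """Raw samples within half_window_s seconds of the event."""
    return [(t, v) for t, v in samples if abs(t - event_time_s) <= half_window_s]


# Hypothetical 3 minutes of 1-second data with a pressure step at t = 95 s.
raw = [(t, 100.0 if t < 95 else 130.0) for t in range(180)]

routine_payload = minute_averages(raw)           # what is sent in real time
event_time = first_surge(raw, jump_threshold=20.0)
event_payload = snippet_around(raw, event_time)  # sent only because a surge occurred

print(routine_payload)     # [(0, 100.0), (60, 112.5), (120, 130.0)]
print(event_time)          # 95
print(len(event_payload))  # 21
```

The routine payload is 3 points instead of 180, and the 21-sample snippet preserves the exact shape of the step that the minute averages smear out.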

Finally, data quality monitoring is part of data management. Compression should never introduce bias – always verify that compressed/filtered data still represents the reality. Outlier detection and validation checks should run on incoming data to catch any sensor errors (e.g. a stuck sensor reading constant values or spikes due to electrical noise). Continuous data quality tools can fill small gaps (interpolate missing data) or flag suspect readings for review. In summary, compress aggressively but intelligently: retain the fidelity needed for AI insights, and use domain knowledge (what patterns matter for plunger lifts) to guide what can be safely compressed or omitted.
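The checks mentioned above (stuck sensors, implausible spikes, small gaps) can be sketched with a few plain functions. The run length, the plausible range, and the maximum gap size are hypothetical values that would need to be set per sensor.

```python
def flag_flatline(values, min_run):
    """Indices that belong to runs of min_run or more identical consecutive readings."""
    flagged = []
    run_start = 0
    for i in range(1, len(values) + 1):
        if i == len(values) or values[i] != values[run_start]:
            # A run just ended; ignore runs of missing data (None).
            if values[run_start] is not None and i - run_start >= min_run:
                flagged.extend(range(run_start, i))
            run_start = i
    return flagged


def flag_out_of_range(values, low, high):
    """Indices of readings outside the plausible [low, high] range."""
    return [i for i, v in enumerate(values) if v is not None and not (low <= v <= high)]


def fill_small_gaps(values, max_gap):
    """Linearly interpolate runs of None no longer than max_gap with valid neighbours."""
    filled = list(values)
    n = len(filled)
    i = 0
    while i < n:
        if filled[i] is not None:
            i += 1
            continue
        j = i
        while j < n and filled[j] is None:
            j += 1
        gap = j - i
        if i > 0 and j < n and gap <= max_gap:
            left, right = filled[i - 1], filled[j]
            for k in range(i, j):
                filled[k] = left + (right - left) * (k - i + 1) / (gap + 1)
        i = j
    return filled


# Hypothetical tubing pressure readings in psi.
readings = [310.0, 312.0, None, 316.0, 316.0, 316.0, 316.0, 9999.0, 320.0]

print(flag_flatline(readings, min_run=4))             # [3, 4, 5, 6]
print(flag_out_of_range(readings, 0.0, 2000.0))       # [7]
print(fill_small_gaps(readings, max_gap=2)[2])        # 314.0
```

Flagged readings are reported rather than silently altered, so a person can decide whether the sensor or the well is misbehaving. Longer gaps are left unfilled on purpose, since interpolating across them would invent data.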

Sensor Placement: Key Locations for Useful Data

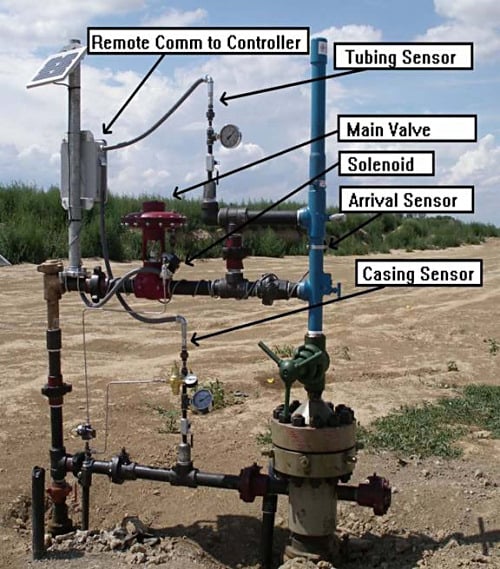

The old adage “measure what matters” applies strongly to artificial lift systems. Strategic sensor placement ensures that all relevant aspects of the plunger lift operation are monitored. In a typical plunger lift well, the minimum critical sensors are: casing pressure, tubing pressure, and a plunger arrival sensor at surface. Casing and tubing pressures indicate the buildup of energy (gas) in the well and the back-pressure from liquids – these govern the plunger’s behavior and are essential for optimization algorithms. A plunger arrival sensor (often a magnetic or acoustic switch in the wellhead lubricator) confirms that the plunger has reached surface each cycle, providing ground truth for cycle timing and an important safety interlock for the controller. Without an arrival sensor, the system would be “blind” to a plunger stuck downhole or arriving late, which could lead to improper cycle resets or even damage.

Additional useful sensors include: flow rate (gas production rate) either per well or per pad – this helps AI gauge how each plunger cycle contributes to output; pressure/temperature at multiple points – ConocoPhillips, for instance, installed extra pressure and temperature sensors at different depths and flowline segments to calculate differentials and identify inefficiencies. In plunger lift operations, measuring both tubing and casing pressure is important: the difference between them at key moments can indicate liquid loading or an inefficient lift. Some operators also use acoustic sensors or fluid level sensors to detect liquid levels in the wellbore. For example, a microphone at the wellhead can “hear” the plunger traveling (through acoustic pulses) and even detect when it hits liquid versus gas, as demonstrated in field experiments. These specialized sensors can enhance an AI’s ability to diagnose problems like partial plunger stalls or insufficient shut-in pressure.
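As a rough sketch of how the casing-tubing difference might be screened, the code below computes the differential at the end of shut-in for several wells and lists those above a review threshold. The well names, pressures, and the 60 psi threshold are all hypothetical; a usable threshold depends on the individual well and should come from engineering judgment.

```python
from dataclasses import dataclass


@dataclass
class ShutInReading:
    """Pressures captured at the end of shut-in, just before the valve opens."""
    well: str
    casing_psi: float
    tubing_psi: float


def differential(reading):
    """Casing pressure minus tubing pressure, in psi."""
    return reading.casing_psi - reading.tubing_psi


def wells_to_review(readings, max_differential_psi):
    """Wells whose differential exceeds the (assumed) review threshold."""
    return [r.well for r in readings if differential(r) > max_differential_psi]


# Hypothetical readings for three wells.
readings = [
    ShutInReading("Well-A", casing_psi=350.0, tubing_psi=330.0),
    ShutInReading("Well-B", casing_psi=360.0, tubing_psi=260.0),
    ShutInReading("Well-C", casing_psi=340.0, tubing_psi=300.0),
]

print([differential(r) for r in readings])                  # [20.0, 100.0, 40.0]
print(wells_to_review(readings, max_differential_psi=60.0)) # ['Well-B']
```

A screen like this only points at wells worth a closer look; it does not by itself diagnose liquid loading.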

It’s also important to consider sensor reliability and calibration – sensors should be rated for the well conditions (pressure, temperature, and sour service if applicable) and regularly calibrated. A mis-calibrated pressure sensor feeding an AI could cause the model to optimize to a wrong setpoint. Hence, placing redundant sensors or periodically validating readings (e.g. against downhole gauges or manual measurements) is a good practice for critical parameters.

In other artificial lift systems (beyond plunger lifts), the principles of sensor placement are similar: identify key performance and health indicators and instrument them. For an electric submersible pump (ESP), one would monitor intake pressure, discharge pressure, motor temperature, vibration, and electrical parameters (current, frequency), as these can signal pump stress or declining reservoir pressure. To predict ESP failures with AI, you would need high-frequency sampling of electrical and vibration data, because a failure often gives its first hints in those signals. For a gas lift system, critical sensors include casing injection pressure, tubing pressure, produced flow rates, and valve status; an AI can then optimize injection rates if it has a clear view of how injection pressure affects production. The overarching rule is to cover all sides of the equation: for any artificial lift method, give the AI visibility into the inputs (controls such as valve positions and cycle times) and the outputs or results (such as flow rates, pressures, and fluid levels). Only with a full picture can machine learning algorithms discern cause and effect to optimize the process.

Recommendation: When designing sensor layouts, consult domain experts (such as OPX Ai) to identify failure modes or inefficiencies and ensure there’s a sensor watching for each. If a certain variable is not measured, the AI will be blind to that aspect. As one Schlumberger advisor put it, always ask “is the answer to your problem contained in your data?”. If not, consider adding the appropriate sensor or measurement so the AI isn’t solving a puzzle with missing pieces.

How OPX Ai Enhances Data and Optimization for Artificial Lift

OPX Ai is an engineering firm specializing in AI-driven solutions for oil and gas operations, and they place a strong emphasis on data acquisition and quality as the bedrock of optimization. Their approach to artificial lift (including plunger lifts) integrates advanced data handling with domain expertise to yield better performance:

Integrated Data Platforms: OPX Ai connects to existing SCADA systems, historians, and IoT sensor networks to create a unified data hub in real-time. By breaking down data silos, they ensure that the AI algorithms have access to all the relevant streams – pressures, flows, sensor alarms, etc. This central platform continuously ingests, cleans, and organizes data from multiple wells, providing a single source of truth. It also enables an “operate-by-exception” model: with live dashboards and alerts, engineers can focus only on wells that need attention while the AI monitors everything else in the background.

AI-Driven Analytics for Optimization: OPX Ai leverages machine learning on the historical and real-time data to determine optimal setpoints and control strategies. For plunger lifts, their AI models analyze past cycles and current conditions to predict the best shut-in duration, flow time, and plunger travel speed for each well. For example, the AI might learn that a particular well starts loading liquid after 45 minutes of flow, and thus recommend a shut-in just before that point to maximize production without killing the well. These insights are fed into the control logic so that plunger cycles dynamically adjust to changing reservoir conditions (e.g. declining pressure or seasonal temperature changes). OPX Ai’s expertise lies in combining domain rules-of-thumb with AI predictions – essentially augmenting traditional plunger lift controllers with a smarter brain that continuously optimizes parameters. In one instance, their system advised tweaks to plunger cycles in a liquid-loaded gas field, resulting in a sustained production uplift (20% as noted earlier).

Enhanced Data Quality and Monitoring: Understanding that data integrity is vital, OPX Ai provides tools to improve data quality in client operations. They assist in optimizing SCADA configurations (like ensuring polling rates are adequate and deadbands not too large) and OSIsoft PI data quality settings. They also help implement server monitoring and redundant data collection so that data streams are robust (no data loss due to outages). According to OPX Ai, good data management practices – defining data standards, validating sensor data, and establishing governance – are crucial to “driving operational excellence”. By enhancing data reliability, they enable their AI algorithms to trust the inputs and thus make correct recommendations. One OPX Ai client testimonial noted that OPX’s anomaly detection (an AI application) was only possible after improving data quality and monitoring, which then “directly enhanced production efficiency and reduced operating costs”.

Predictive Maintenance and Anomaly Detection: OPX Ai’s platforms apply predictive analytics to the collected data to flag deviations or degrading trends. For plunger lifts, this might mean identifying a gradual increase in plunger travel time or a drop in peak casing pressure over several weeks, both early signs of well slugging or plunger wear. Their system can alert operators to these issues before they become failures. In a broader artificial lift context, OPX Ai uses AI models to detect anomalies in pump vibration signatures, motor current patterns, etc., enabling proactive maintenance scheduling. This reduces unplanned downtime and avoids catastrophic failures by fixing problems at the “predict and prevent” stage rather than after the fact. One OPX Ai deployment in an Integrated Operations Center helped monitor 150+ wells remotely, using AI to sift through the data and only alert engineers when something truly needed intervention, a huge efficiency gain for field teams.

Continuous Improvement Loop: By combining human Subject Matter Experts (SMEs) with AI, OPX Ai makes sure that the data insights translate to practical field actions. They often start by auditing the existing data: are sensors placed correctly? Is the sampling frequency sufficient? They then fill any gaps (for instance, recommending an upgrade to a higher resolution pressure sensor or adding a flow meter on a well) so that the AI has the needed inputs. Once the AI control is in place, OPX Ai continues to track performance and gather more data, which in turn is used to retrain or fine-tune the models. This virtuous cycle means the optimization gets better over time as more high-quality data becomes available. Essentially, OPX Ai doesn’t just drop in an algorithm – they help clients build the data infrastructure and culture to sustain AI-driven optimization long-term.
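The gradual increase in plunger travel time mentioned under Predictive Maintenance above can be screened with something as simple as a least-squares trend line. The sketch below is a generic illustration, not OPX Ai’s method; the weekly travel times and the 0.05 min/day alert threshold are hypothetical.

```python
def slope_per_day(days, values):
    """Ordinary least-squares slope of values against days."""
    n = len(days)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired observations")
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("all observations share the same day")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    return sxy / sxx


def is_drifting(days, values, max_slope_per_day):
    """True when the fitted upward trend is steeper than the (assumed) threshold."""
    return slope_per_day(days, values) > max_slope_per_day


# Hypothetical average plunger travel time (minutes), sampled weekly.
days = [0, 7, 14, 21, 28]
travel_min = [9.0, 9.5, 10.0, 10.5, 11.0]

print(round(slope_per_day(days, travel_min), 4))            # 0.0714
print(is_drifting(days, travel_min, max_slope_per_day=0.05)) # True
```

A trend of about 0.07 minutes per day looks small on any single cycle but adds up to two minutes over four weeks, which is the kind of slow change that is easy to miss by eye.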

Notably, OPX Ai recognizes that data overload without context is a missed opportunity. They stress converting the “flood of data from SCADA and sensors” into actionable insights. In practical terms, they implement meaningful visualizations and reports (like plunger cycle dashboards, well performance scorecards) so that engineers and operators can trust and act on the AI’s recommendations. By improving data acquisition and processing, OPX Ai ensures that operators spend less time wrestling with raw data and more time making decisions that boost production and efficiency.

Key Insights and Practical Recommendations

“Good Data = Good AI”: High-quality data is indispensable for AI-driven optimization of artificial lift. Ensure your data is accurate, timely, and relevant to the phenomena you’re trying to control. In plunger lifts, that means capturing the full picture of each cycle (pressures, flow, plunger arrival) and maintaining that data integrity. Companies have seen double-digit production gains by investing in better data collection and analytics, so this is a proven avenue to value.

Optimize Data Frequency: Match your data sampling to the dynamics of your well. Use higher-frequency sampling (seconds or minutes) to catch rapid events like plunger arrivals or pressure surges; use event triggers for very fast phenomena. Avoid overly sparse data – if you sample too slowly, you might miss critical build-up or blowdown events and your AI will be guessing. A good rule of thumb is to sample at least several times within the duration of key process phases (e.g. dozens of points during a plunger cycle). Monitor the system and adjust – if the AI or operators still can’t “see” an issue, you may need to increase frequency or add an event sensor.

Employ Smart Data Reduction: Implement data compression techniques that filter noise but not signal. Use the compression features of your SCADA/PI system to reduce redundant points. Archive raw high-res data short-term and summarized data long-term. Most importantly, design what to record with domain knowledge: for example, always log the max casing pressure achieved each cycle and the time of plunger arrival – these are gold nuggets for optimization. By contrast, a constant pressure reading adds little value, so let your system compress it until it changes. Regularly review data logs and verify that no important transients are getting lost due to compression settings.

Strategic Sensor Deployment: You can’t optimize what you don’t measure. Equip wells with a baseline sensor suite that covers all critical variables of the lift system. For plunger lifts, at minimum use tubing and casing pressure sensors and a plunger arrival detector. Consider adding flow measurement per well if feasible, as it greatly aids optimization and diagnostics (even a periodic portable flow test is better than nothing). If certain problems persist (e.g. fluid fallback, paraffin buildup), add specialized instrumentation like acoustic sensors or temperature gauges to track those conditions. Each sensor should have a clear purpose – if it doesn’t drive a decision or input to the AI, reassess its necessity.

Data Quality Maintenance: Treat data as an asset. Implement validation rules: e.g., if tubing pressure flatlines at an exact value for hours, flag it for sensor check; if values go out of plausible range, exclude or investigate. Train field personnel in the importance of sensor upkeep (calibration, cleaning, timely replacement if faulty). Also, maintain time synchronization across data sources – misaligned timestamps can confuse AI models. Governance is key: assign responsibility for data quality checks, perform periodic audits on data completeness, and use monitoring tools (as OPX Ai suggests) to ensure your data pipeline is healthy.

Leverage Expertise with AI: A recurring theme is that pairing domain knowledge with AI yields the best results. Use engineers’ intuition to guide the AI model development – e.g. tell the data scientists which pressure differential indicates a loaded well, or what a normal vs abnormal plunger arrival time is. This helps in feature selection and labeling for machine learning. In operations, trust but verify the AI’s suggestions; use human insight especially in novel situations that fall outside the training data. Over time, as the AI proves itself, you can automate more decisions. OPX Ai’s success in optimization comes from deeply understanding both the physics of lifts and the power of algorithms – emulate that integrated approach.

Start Small, Scale Up: It’s often wise to pilot AI optimization on a subset of wells or a single field, ensuring the data collection and analysis loop works smoothly. Iron out integration issues (connecting to legacy SCADA, cleaning historical data, etc.) on a small scale. As you demonstrate gains – e.g. fewer plunger stalls, increased production, reduced truck rolls for well checks – use those wins to justify scaling to more wells. Keep stakeholders informed with clear metrics (production uplift, downtime reduction, $/year saved on maintenance) attributable to the AI and data improvements. This builds the case for further investment in high-quality data infrastructure across your operations.
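The per-cycle values singled out under Smart Data Reduction above (maximum casing pressure and plunger arrival time) can be captured in a compact summary record. This is a minimal sketch; the `Cycle` structure, its field names, and the sample numbers are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Cycle:
    """One plunger cycle (hypothetical structure)."""
    open_time_s: int                      # when the valve opened
    arrival_time_s: Optional[int]         # None if the plunger never arrived
    casing_psi: List[Tuple[int, float]]   # (time_s, pressure_psi) samples


def summarize(cycle):
    """Reduce a cycle to the few values worth keeping long-term."""
    peak_time_s, peak_psi = max(cycle.casing_psi, key=lambda p: p[1])
    if cycle.arrival_time_s is None:
        travel_time_s = None
    else:
        travel_time_s = cycle.arrival_time_s - cycle.open_time_s
    return {
        "max_casing_psi": peak_psi,
        "time_of_max_s": peak_time_s,
        "travel_time_s": travel_time_s,
    }


samples = [(0, 200.0), (900, 280.0), (1800, 350.0), (2400, 240.0)]
normal = Cycle(open_time_s=1800, arrival_time_s=2340, casing_psi=samples)
missed = Cycle(open_time_s=1800, arrival_time_s=None, casing_psi=samples)

print(summarize(normal))
# {'max_casing_psi': 350.0, 'time_of_max_s': 1800, 'travel_time_s': 540}
print(summarize(missed)["travel_time_s"])  # None
```

Keeping a missed arrival as an explicit `None` rather than dropping the cycle preserves exactly the kind of event an optimization model needs to learn from.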

In conclusion, high-quality data is the lifeblood of AI-driven optimization for plunger lifts and other artificial lift systems. By paying attention to how data is gathered, compressed, and utilized – and by partnering with experts like OPX Ai who specialize in these practices – operators can unlock significant performance improvements. The combination of robust data and advanced AI turns previously manual, reactive operations into efficient, automated, and proactive ones. Wells produce more, run smoother, and surprise us less often with failures. The path to that outcome starts with a commitment to data excellence at the wellhead, the network, and the analytics platform. As the industry saying goes, “In God we trust; all others, bring data.” – now we would add: “…and make sure it’s good data!”

OPX Ai is an engineering services company that helps organizations reduce their carbon footprint and transition to cleaner and more efficient operations.